- If you have no .ssh directory in your home directory, ssh to some other machine in the lab, then press Ctrl-d to close the connection. This creates .ssh and some related files.

- From your home directory, make .ssh secure by entering:

chmod 700 .ssh

- Next, make .ssh your working directory by entering:

cd .ssh

- To list/view the contents of the directory, enter:

ls -a [we used ls -l]

- To generate your public and private keys, enter:

ssh-keygen -t rsa

The first prompt asks for the name of the file in which your private key will be stored; press Enter to accept the default name (id_rsa). The next two prompts ask for a passphrase and its confirmation. Since we are trying to avoid entering passwords, just press Enter at both prompts to leave the passphrase empty; this returns you to the system prompt.

- To compare with the previous output of ls and see which new files have been created, enter:

ls -a [we used ls -l]

You should see id_rsa containing your private key, and id_rsa.pub containing your public key.

- To make your public key the only thing needed for you to ssh to a different machine, enter:

cat id_rsa.pub >> authorized_keys

[The Linux boxes on our LAN, soon to be a cluster, have IPs ranging from 10.5.129.1 to 10.5.129.24. So, we copied each node's id_rsa.pub file to temp01-temp24 and uploaded these files via ssh to the teacher station. Then we just ran cat tempnn >> authorized_keys for each temp file to generate one master authorized_keys file for all nodes, which we could then download to each node's .ssh dir.]
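The master-file trick in the note above can be sketched in Python. This is a minimal sketch, assuming the uploaded public keys sit in one directory as temp01 through temp24 (the names from the note). Unlike a plain `cat tempnn >> authorized_keys` loop, it writes a fresh file and skips duplicate key lines, so re-running it after adding nodes does not grow the file with repeats.

```python
from pathlib import Path


def merge_public_keys(key_texts):
    """Merge the contents of several id_rsa.pub files into one
    authorized_keys body: one key per line, blank lines dropped,
    duplicates removed, first-seen order kept."""
    keys = []
    for text in key_texts:
        for line in text.splitlines():
            line = line.strip()
            if line:
                keys.append(line)
    unique = list(dict.fromkeys(keys))  # order-preserving de-duplication
    return "".join(key + "\n" for key in unique)


def build_authorized_keys(key_dir, out_file, count=24):
    """Read key_dir/temp01 .. key_dir/tempNN (missing files are skipped,
    e.g. nodes not set up yet), write the merged keys to out_file
    (overwriting it), and return the number of keys written."""
    texts = []
    for n in range(1, count + 1):
        path = Path(key_dir) / f"temp{n:02d}"
        if path.exists():
            texts.append(path.read_text())
    body = merge_public_keys(texts)
    Path(out_file).write_text(body)
    return body.count("\n")
```

For example, running build_authorized_keys(".", "authorized_keys") on the teacher station, in the directory holding the temp files, would produce the one master file to copy into each node's .ssh dir.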

- [optional] To make it so that only you can read or write the file containing your private key, enter:

chmod 600 id_rsa

- [optional] To make it so that only you can read or write the file containing your authorized keys, enter:

chmod 600 authorized_keys
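Before testing ssh between nodes, it's worth confirming these permissions on every box: OpenSSH's sshd, with its default StrictModes setting, may refuse key-based logins when the .ssh directory or authorized_keys is writable by other users. A minimal sketch, assuming Python 3 is available on each node, that compares the actual modes with the chmod values above and can optionally fix them:

```python
import os
import stat

# Permission bits from the chmod steps above.
EXPECTED_MODES = {
    ".": 0o700,                # the .ssh directory itself
    "id_rsa": 0o600,           # private key
    "authorized_keys": 0o600,  # public keys allowed to log in
}


def mode_problems(actual_modes):
    """Given {name: permission bits}, return [(name, actual, expected)]
    (modes as octal strings) for every entry that differs from
    EXPECTED_MODES. Names absent from actual_modes are ignored."""
    problems = []
    for name, wanted in EXPECTED_MODES.items():
        actual = actual_modes.get(name)
        if actual is not None and actual != wanted:
            problems.append((name, oct(actual), oct(wanted)))
    return problems


def check_ssh_dir(ssh_dir, fix=False):
    """Read the real modes under ssh_dir (missing files are skipped) and
    return the mismatches found. With fix=True, also chmod each
    mismatched path to its expected mode, like the manual commands."""
    actual = {}
    for name in EXPECTED_MODES:
        path = os.path.join(ssh_dir, name)
        if os.path.exists(path):
            actual[name] = stat.S_IMODE(os.stat(path).st_mode)
    problems = mode_problems(actual)
    if fix:
        for name, _, _ in problems:
            os.chmod(os.path.join(ssh_dir, name), EXPECTED_MODES[name])
    return problems
```

For example, check_ssh_dir(os.path.expanduser("~/.ssh"), fix=True) run as the cluster user reports and repairs any mismatch; an empty list means the modes already match.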

InstantCluster Step 5: Software Stack II (Meeting V)

We then installed openMPI (we had far fewer dependencies this year with Natty 11.04 64-bit) and tested multi-core performance with flops. Testing the cluster as a whole will have to wait until the next meeting, when we scale the cluster! We followed the openMPI install instructions for Ubuntu from http://www.cs.ucsb.edu/~hnielsen/cs140/openmpi-install.html

These instructions say to use sudo and run apt-get install openmpi-bin openmpi-doc libopenmpi-dev. However, because of the way our firewall is set up at school, I can never update my apt-get sources properly. So, I used http://packages.ubuntu.com and installed openmpi-bin, gfortran and libopenmpi-dev. That's it! Then we used a FORTRAN program, flops.f, to test multi-core performance. FORTRAN, really? I haven't used FORTRAN77 since 1979! ...believe it or don't!

We compiled flops.f on the Master Node (any node can be a master):

mpif77 -o flops flops.f

and tested openmpi and got just under 800 MFLOPS using 2 cores (one PC):

mpirun -np 2 flops

Next, we generated a "machines" file to tell mpirun where all the nodes (Master and Workers) are, and ran flops across them. For example, with 2 PCs (nodes) of 2 cores each:

mpirun -np 4 --hostfile machines flops
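Our actual machines file isn't shown here. A minimal sketch, assuming Open MPI's hostfile format (one host per line, optionally followed by slots=N for how many processes that host may run) and the 10.5.129.x addresses from earlier, that writes the file for a range of nodes and counts the slots to pick a matching -np:

```python
def machines_file(first, last, cores_per_node=2, prefix="10.5.129."):
    """Return the text of an Open MPI hostfile listing prefix+first ..
    prefix+last, one node per line, each with slots=cores_per_node."""
    return "".join(
        f"{prefix}{host} slots={cores_per_node}\n"
        for host in range(first, last + 1)
    )


def total_slots(hostfile_text):
    """Sum the slots=N counts in a hostfile; blank lines and # comments
    are ignored. A host with no slots= entry is counted as 1 here, which
    is an assumption: check your Open MPI version's default."""
    total = 0
    for line in hostfile_text.splitlines():
        line = line.split("#", 1)[0].strip()
        if not line:
            continue
        slots = 1
        for field in line.split()[1:]:
            if field.startswith("slots="):
                slots = int(field[len("slots="):])
        total += slots
    return total
```

With this layout, machines_file(19, 25) describes 7 dual-core nodes, and total_slots of that text is 14, so mpirun -np 14 --hostfile machines flops would put one process on every core.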

Note: last year we got about 900 MFLOPS per node. This year we still have 64-bit AMD Athlon dual-core processors. However, these are new PCs, so these Athlons have slightly different specs. Also, last year we were running Maverick 10.04 32-bit ... and ... these new PCs were supposed to be quad-cores! We are still awaiting shipment.
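For anyone wondering what a FLOPS test actually measures: count the floating-point operations a loop performs and divide by the elapsed time. We don't reproduce flops.f here; as a hypothetical stand-in, this sketch times a midpoint-rule estimate of pi (the integral of 4/(1+x^2) over [0, 1]) and splits the intervals among "ranks" the way an MPI program would, but runs the ranks one after another in a single Python process:

```python
import time


def partial_pi(rank, size, n):
    """One rank's share of the midpoint-rule sum for the integral of
    4/(1+x^2) over [0, 1], which equals pi. Rank r takes intervals
    r, r+size, r+2*size, ... Returns (partial_sum, flop_count), where
    flop_count covers the loop body only."""
    h = 1.0 / n
    total = 0.0
    flops = 0
    for i in range(rank, n, size):
        x = h * (i + 0.5)             # 2 flops: add, multiply
        total += 4.0 / (1.0 + x * x)  # 4 flops: multiply, add, divide, add
        flops += 6
    return total * h, flops


def mflops_run(size=2, n=1_000_000):
    """Run every rank's share in turn (no real parallelism here) and
    return (pi_estimate, MFLOPS achieved by this Python loop)."""
    start = time.perf_counter()
    pieces = [partial_pi(rank, size, n) for rank in range(size)]
    elapsed = max(time.perf_counter() - start, 1e-9)  # avoid dividing by zero
    pi_estimate = sum(part for part, _ in pieces)
    total_flops = sum(count for _, count in pieces)
    return pi_estimate, total_flops / elapsed / 1e6
```

In a real MPI version each rank would run its share at the same time on its own core and the partial sums would be combined with a reduce. Expect the MFLOPS figure from interpreted Python to be far below what compiled FORTRAN reports on the same hardware.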

InstantCluster Step 6: Scaling the cluster

UPDATE: 2011.1126 (Meeting VI)

Including myself, we only had 3 members attending this week. So, we added 3 new nodes. We had nodes 21-24 working well last time. Now we have nodes 19-25 for a total of 7 nodes, 14 cores and over 5 GFLOPS! This is how we streamlined the process:

(1) adduser jobs and log in as jobs

(2) go to http://packages.ubuntu.com and install openssh-server from the natty repository

(3) create /home/jobs/.ssh dir and cd there

(4) run ssh-keygen -t rsa

(5) add the new public keys to /home/jobs/.ssh/authorized_keys on all nodes

(6) add the new IPs to /home/jobs/machines on all nodes

(7) go to http://packages.ubuntu.com and install openmpi-bin, gfortran and libopenmpi-dev from the natty repository

(8) download flops.f to /home/jobs from our FTP site, then compile it:

mpif77 -o flops flops.f

and run it on one node:

mpirun -np 2 flops

or across the cluster:

mpirun -np 4 --hostfile machines flops

NB: since we are using the same hardware, firmware and compiler everywhere, we don't need to recompile flops.f on every box; just copy the flops binary from another node!

(9) The secret is setting up each node identically:

/home/jobs/flops

/home/jobs/machines

/home/jobs/.ssh/authorized_keys
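Step (9) can be checked mechanically. A minimal sketch, assuming Python 3 is available on each node and that you gather each node's node_digests("/home/jobs") result in one place (for example over the passwordless ssh set up earlier); node names like node19 are hypothetical labels. It compares SHA-256 digests of the shared files and lists any file that is missing or differs on some node:

```python
import hashlib
from pathlib import Path

# The shared files from step (9), relative to /home/jobs.
SHARED_FILES = ["flops", "machines", ".ssh/authorized_keys"]  # sshd's default per-user key file


def digest(data):
    """SHA-256 hex digest of a file's bytes."""
    return hashlib.sha256(data).hexdigest()


def node_digests(home_dir):
    """Hash each shared file under home_dir; a missing file maps to None."""
    result = {}
    for name in SHARED_FILES:
        path = Path(home_dir) / name
        result[name] = digest(path.read_bytes()) if path.is_file() else None
    return result


def mismatched_files(per_node):
    """per_node maps a node label to that node's node_digests() output.
    Return the sorted names of shared files that are missing on some
    node or whose digest is not identical on every node."""
    bad = []
    for name in SHARED_FILES:
        seen = {digests.get(name) for digests in per_node.values()}
        if len(seen) > 1 or None in seen:
            bad.append(name)
    return sorted(bad)
```

An empty list from mismatched_files means every node has the same flops binary, machines file and key file, which is exactly the "set up each node identically" rule.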

UPDATE: 2011.1214 (Meeting VII)

We had 5 members slaving away today. Nodes 19-25 were running at about 5 GFLOPS last meeting. Today we added nodes 10, 11, 12, 17 and 18. However, we ran into some errors when testing more than the 14 cores we had last time. We should now have 24 cores and nearly 10 GFLOPS, but that will have to wait until next time, when we debug everything again...
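The core and GFLOPS numbers in these updates come from scaling up the one-PC result. A minimal sketch, assuming each dual-core node delivers about 800 MFLOPS (the Meeting V figure, rounded up) and that performance grows linearly with the number of nodes unless you pass a lower parallel efficiency:

```python
def cluster_estimate(nodes, cores_per_node=2, mflops_per_node=800.0, efficiency=1.0):
    """Return (cores, GFLOPS) for a cluster of identical nodes, assuming
    each node delivers mflops_per_node and the job scales with the given
    parallel efficiency (1.0 means perfectly linear speed-up)."""
    cores = nodes * cores_per_node
    gflops = nodes * mflops_per_node * efficiency / 1000.0
    return cores, gflops
```

For the 7 nodes from Meeting VI, cluster_estimate(7) gives 14 cores and 5.6 GFLOPS ("over 5 GFLOPS"); adding nodes 10, 11, 12, 17 and 18 makes 12 nodes, and cluster_estimate(12) gives 24 cores and 9.6 GFLOPS ("nearly 10 GFLOPS"). A measured total well below the estimate suggests communication overhead or a setup problem on some node.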

===================================================

What we are researching IV

(maybe we can use Python on our MPI cluster?):

What we are researching III

(look at some clustering environments we've used in the past):

We used PVM and PVMPOV in the 1990s.

openMOSIX and kandel were fun in the 2000s.

Let's try out openMPI and Blender now!

What we are researching II

(look what other people are doing with MPI):

What we are researching I

(look what this school did in the 80s and 90s):

Thomas Jefferson High courses

Thomas Jefferson High paper

Thomas Jefferson High ftp

Thomas Jefferson High teacher

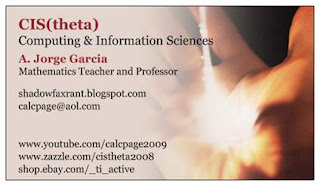

CIS(theta) 2011-2012 - Scaling the Cluster! - Meeting VII

CIS(theta) 2011-2012: GeorgeA, GrahamS, KennyK, LucasE; CIS(theta) 2010-2011: HerbertK

Chapter 4: Building Parallel Programs (BPP) using clusters and parallelJava

===================================================

Well, that's all folks, enjoy!