CIS(theta) 2019-2020

COVID19 June "Meeting" #15 (6/15/2020):

Let's Scale Up That Cluster!

If it weren't for the COVID19 Pandemic, this would have been our last meeting and we would have added more hardware, scaling from 4 cores to 8 cores, 16 cores or more! We've been relegated to 4 cores until now as we were only using one Raspberry PI. We wanted to see how well our little Beowulf Linux Cluster would do with 2 or 4 or maybe even 8 Raspberry PIs. Since school is shut down, we won't be able to accomplish this step of our project. So have a look at how this would work in the following videos and have a great summer!

CIS(theta) 2019-2020

COVID19 May "Meeting" #14 (5/15/2020):

Let's Fire Up Mandel_MPI.py!

CH12: MAY READING

This month let's see if we can get Mandel_MPI.py running on 4 cores of one Raspberry Pi! See slides 43-52 below:

CIS(theta) 2019-2020

COVID19 April "Meeting" #13 (4/15/2020):

How Efficient Is Mandel_Serial.py?

CH11: APRIL READING

Our campus is closed! So we're not having a real meeting due to the COVID-19 scare of 2020. Instead, I took the best of the 3 versions of Mandel_Serial00.py, Mandel_serial01.py, Mandel_Serial02.py and created Mandel_Serial03.py!

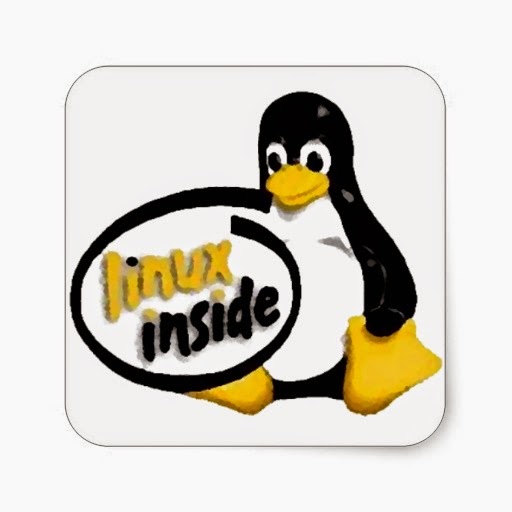

I ran Mandel_Serial03.py generating several plots of the Mandelbrot Fractal with varied resolutions and color depths, aka dwells, on a single Raspberry PI. As you can see in my outputs below, the efficiency of each program run degrades significantly as the resolution or dwell is increased even slightly. Hence the need for a multicore solution! We'll try our hands at an MPI version next month. For now, see if you can reproduce my outputs as shared below. Don't forget to read CH11 linked above!

Note that I changed permissions on Mandel_Serial03.py using chmod 755 so I could run it from the commandline as an executable file! Next time we'll write an MPI version and see what happens to the efficiency of rendering our plots with 1, 2 and 4 cores.
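Here is a minimal sketch of what such an MPI version might look like. It is not the Mandel_MPI.py from our slides: the function names (escape_count, my_rows, render) and the row-by-row split are my own assumptions for illustration. Each process computes every size-th row and rank 0 gathers the pieces. If mpi4py is not installed, the sketch falls back to a single process so it still runs.

```python
# Sketch only: row-cyclic Mandelbrot with mpi4py (all names are hypothetical).
try:
    from mpi4py import MPI
    comm = MPI.COMM_WORLD
    rank, size = comm.Get_rank(), comm.Get_size()
except ImportError:
    # Assumption: fall back to one process so the sketch runs without MPI.
    comm, rank, size = None, 0, 1


def escape_count(c, max_iteration):
    """Count iterations of z -> z*z + c before abs(z) exceeds 2."""
    z = 0j
    for n in range(max_iteration):
        if abs(z) > 2.0:
            return n
        z = z * z + c
    return max_iteration


def my_rows(width, height, max_iteration):
    """Compute only the rows this rank owns: rank, rank+size, rank+2*size, ..."""
    rows = {}
    for j in range(rank, height, size):
        y = -1.0 + 2.0 * j / (height - 1)
        rows[j] = [
            escape_count(complex(-2.0 + 3.0 * i / (width - 1), y), max_iteration)
            for i in range(width)
        ]
    return rows


def render(width, height, max_iteration):
    """Gather every rank's rows on rank 0 and return the full grid there."""
    local = my_rows(width, height, max_iteration)
    pieces = [local] if comm is None else comm.gather(local, root=0)
    if rank != 0:
        return None
    merged = {}
    for piece in pieces:
        merged.update(piece)
    return [merged[j] for j in range(height)]


if __name__ == "__main__":
    grid = render(60, 21, 32)
    if rank == 0:
        for row in grid:
            print("".join("#" if count == 32 else "." for count in row))
```

Saved under a hypothetical name such as mandel_mpi_sketch.py, it could be launched with mpirun -np 4 python mandel_mpi_sketch.py. Interleaving rows spreads the slow rows (those crossing the set) across all the cores instead of handing them to one process.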

I'm afraid that will be the end of our project this year as we can't scale the hardware now that our campus is off limits for the foreseeable future! Further, the last stage of our project, after scaling the hardware, would have been to zoom in on different sections of the Mandelbrot Fractal and maybe even create a Mandel Zoom animated movie!

CIS(theta) 2019-2020

COVID19 Meetings #11-12 (3/18-3/25):

What About Mandelbrot Fractals 2?

CH10: MARCH READING

We had to cancel our March meetings due to the COVID19 Pandemic. So, please just read chapter 10 linked above and get the following version of our mandel.py program working on your RPI! This is the version that runs in SAGE, and here's the version that runs on the RPI with commandline input. Neither version uses MPI as of yet. Let's save that for our next virtual meeting in April.

Our May and June meetings may have to be cancelled entirely due to the COVID19 scare. During these meetings we were going to scale the cluster and add at least 4 more cores requiring engineering in close quarters at school.

commandline:

python mandel_serial02.py 2000 1500 32

Here's some sample output. These plots used 2000 vertical pixels by 1500 horizontal pixels (3 megapixels) with a max of 32 colors (12 megabytes per file). Our 32 color max makes the program run faster. However, the RGB color map uses 4 bytes of memory per pixel (8 bits each for Red, Green, Blue and Alpha aka transparency), so 3 megapixels take 12 megabytes. Any more pixels or colors will make the plot render too slowly on SAGE and RPI. That's why we wanted to scale the cluster from 4 cores to 8 cores or more to experiment with enhanced performance!
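The 12 megabyte figure is just pixels times bytes per pixel. Here is a quick check, assuming an uncompressed RGBA image at 4 bytes per pixel (the function name image_bytes is mine, not from our program):

```python
def image_bytes(width, height, bytes_per_pixel=4):
    """Uncompressed size of an image: one byte each for R, G, B and Alpha."""
    return width * height * bytes_per_pixel


if __name__ == "__main__":
    size = image_bytes(2000, 1500)
    print(size, "bytes =", size / 1e6, "megabytes")
```

Doubling both dimensions to 4000 by 3000 would quadruple the memory to 48 megabytes, which is one reason the plots get slow so quickly.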

CIS(theta) 2019-2020

Meeting #10 (2/26/20):

What About Mandelbrot Fractals?

CH09: FEBRUARY READING

Today we tried our hand at coding fractal graphics on the complex plane! We are evaluating z(n+1)=z(n)^2+c where c is a point on the complex plane and z(0)=0+0i (equivalently, z(1)=c). The Mandelbrot Set consists of the points c on the Complex Plane such that the magnitude of the Complex Vector z(n) is never greater than 2 units!

We use complex vector z(0) to calculate z(1), then we take z(1) to find z(2) and so on. I think of this as a recursion for every point in our complex plot that corresponds to a pixel on the screen. The base case is to stop this process if abs(z(n)) ever exceeds 2. The Mandelbrot set is the set of points where the magnitude never exceeds 2, but we cannot iterate infinitely many times per pixel!

Instead, we set a maxIteration value. If we hit that value, we'll pretend that's infinity and move on to the next pixel. Of course the plot will be more accurate if maxIteration is large. Also, the resolution of our plot will be better the more pixels we jam into the domain of our plot (xmin=-2, xmax=1, ymin=-1, ymax=1). As the number of iterations and pixels increases, the rendering of our plot gets slower. That's where parallel programming in MPI comes in!

We also keep track of how many times we iterate before achieving the base case. If the count is maxIteration, we'll call that point a member of the Mandelbrot Set and color that pixel black. Otherwise, if we hit the base case before maxIteration, we map that count to a color other than black for that pixel.

What follows is our attempt in python on SAGE. This program generates a 2D array we called C[i,j] representing the counts for each pixel and then graphs those counts with one color for the Mandelbrot Set and another color for points outside the set.
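For readers without SAGE, here is a plain python sketch of the same idea. It is not our original mandel.py. I am assuming that i indexes rows (top row is ymax) and j indexes columns, and the names count_iterations and counts_grid are hypothetical. It prints a two-character text plot instead of a color graph.

```python
# Domain of the plot, as given in the text above.
XMIN, XMAX, YMIN, YMAX = -2.0, 1.0, -1.0, 1.0


def count_iterations(c, max_iteration):
    """Iterate z(n+1) = z(n)^2 + c from z(0) = 0 until abs(z) exceeds 2."""
    z = 0j
    count = 0
    while count < max_iteration and abs(z) <= 2.0:
        z = z * z + c
        count += 1
    return count


def counts_grid(width, height, max_iteration):
    """Build C[i][j]: the iteration count for the pixel in row i, column j."""
    C = []
    for i in range(height):
        y = YMAX - (YMAX - YMIN) * i / (height - 1)
        row = []
        for j in range(width):
            x = XMIN + (XMAX - XMIN) * j / (width - 1)
            row.append(count_iterations(complex(x, y), max_iteration))
        C.append(row)
    return C


if __name__ == "__main__":
    max_iteration = 30
    for row in counts_grid(61, 21, max_iteration):
        # '#' marks points we call members of the set, '.' marks everything else.
        print("".join("#" if count == max_iteration else "." for count in row))
```

A count equal to max_iteration is our stand-in for "never escapes," so a larger max_iteration gives a more accurate boundary at the cost of more work per pixel.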

Next month, March, we will get this program, mandel.py, working from the command line on the Raspberry PI, add more colors and MPI functionality to the code. April and May will be devoted to scaling the hardware of our cluster!

CIS(theta) 2019-2020

Meeting #8 (1/15/20) & Meeting #9 (1/29/20):

Time To Calculate PI!

CH08: JANUARY READING

We dedicated this month to translating the section of the FORTRAN program flops.f that calculates PI, by adding more and more Riemann Rectangles or more and more cores to the mix, into our own pythonic code. We came up with 3 versions of myPI_serial.py (meeting #8 end of semester 1) and 2 versions of myPI_mpi.py (meeting #9 start of semester 2). Please see code samples and screenshots below.

myPI_serial_v01.py simply calculates the Riemann Sum. We defined 2 functions: f(x)=4.0/(1+x^2) and another function, RSum(a,b,n), to sum all the rectangular areas using quadrature to get better and better estimates of PI. myPI_serial_v02.py adds a timer to the first version. Then myPI_serial_v03.py added commandline input as well as error estimates to our second version.
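Here is a minimal sketch along the lines of those serial versions. It keeps the names f and RSum from the text, but the body is my reconstruction, and the choice of midpoint rectangles is an assumption (left or right endpoints would also converge to PI, just more slowly).

```python
import math
import time


def f(x):
    """Integrand whose area from 0 to 1 equals PI."""
    return 4.0 / (1.0 + x * x)


def RSum(a, b, n):
    """Midpoint Riemann sum of f on [a, b] using n rectangles."""
    dx = (b - a) / n
    return dx * sum(f(a + (k + 0.5) * dx) for k in range(n))


if __name__ == "__main__":
    for n in (10, 1000, 100000):
        start = time.perf_counter()
        estimate = RSum(0.0, 1.0, n)
        elapsed = time.perf_counter() - start
        print(n, estimate, abs(estimate - math.pi), elapsed)
```

Each line of output shows the number of rectangles, the estimate, the absolute error against math.pi and the elapsed time in seconds.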

myPI_mpi_v04.py is actually from Lisandro Dalcin's presentation about mpi4py. We used Lisandro's version to get some more experience working with mpi4py. Our version of the final program, myPI_mpi_v05.py, combines all the MPI functionality of Dalcin's version with our myPI_serial_v03.py's functions, timers and error estimation.
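A rough sketch of how the parallel version can be organized follows. This is neither Dalcin's code nor our myPI_mpi_v05.py. The name parallel_pi is hypothetical, and the single-process fallback when mpi4py is missing is my own addition so the sketch runs anywhere. Each process sums every size-th rectangle and allreduce adds up the partial sums.

```python
import math
import time

try:
    from mpi4py import MPI
    comm = MPI.COMM_WORLD
    rank, size = comm.Get_rank(), comm.Get_size()
except ImportError:
    # Assumption: run as a single process when mpi4py is unavailable.
    comm, rank, size = None, 0, 1


def f(x):
    """Integrand whose area from 0 to 1 equals PI."""
    return 4.0 / (1.0 + x * x)


def parallel_pi(n):
    """Midpoint sum over n rectangles, with the rectangles dealt out by rank."""
    dx = 1.0 / n
    local = dx * sum(f((k + 0.5) * dx) for k in range(rank, n, size))
    if comm is None:
        return local
    # Every rank receives the total of all the partial sums.
    return comm.allreduce(local, op=MPI.SUM)


if __name__ == "__main__":
    n = 1000000
    start = time.perf_counter()
    estimate = parallel_pi(n)
    elapsed = time.perf_counter() - start
    if rank == 0:
        print(size, "processes:", estimate, abs(estimate - math.pi), elapsed)
```

Running it with 1, 2 and 4 processes and comparing the elapsed times is one way to reproduce the kind of timing comparison described in this entry.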

Note our confirmation of Amdahl's Law (see this month's reading, CH08) in the last two screenshots. As we added more and more rectangles to the calculation, our error term became smaller and smaller. However, as we added more and more processors to the mix, we did not achieve a linear Speedup. In other words, doubling the number of processors did not cut our computing time in half. We cannot attain a linear Speedup on a Beowulf Cluster such as ours. We will see that a linear Workup is possible: if we can complete a job in a certain amount of time, then we can complete twice as much work in that same amount of time if we also double the number of processors!
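To see why the Speedup cannot be linear, here is Amdahl's Law as a tiny function. The parallel fraction of 0.9 below is an illustrative assumption, not a value we measured on our RPI.

```python
def amdahl_speedup(parallel_fraction, processors):
    """Amdahl's Law: speedup = 1 / ((1 - p) + p / n)."""
    serial_fraction = 1.0 - parallel_fraction
    return 1.0 / (serial_fraction + parallel_fraction / processors)


if __name__ == "__main__":
    # Assumed: 90% of the run time can be spread across processors.
    for n in (1, 2, 4, 8):
        print(n, "processors:", round(amdahl_speedup(0.9, n), 2))
```

With 90% of the work parallel, 4 processors give a speedup of only about 3.1, and no number of processors can ever beat 10, because the serial 10% never shrinks.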

BTW, I've been offering my Computing Independent Study course to students who have completed AP Computer Science since the advent of Linux and powerful computing environments (such as SAGE, Octave and R) compatible with desktop PCs (late 1990s). I originally modeled my course after AMS_596, a 1 credit course introducing parallel computing to graduate students taking a PhD in Numerical Methods at SUNY Stony Brook. By our meeting #8 we have covered roughly half a semester of AMS_596. Now I see that the Graduate Mathematics Department at SUNY Stony Brook offers a more robust 3 credit course, AMS_530, as a co-requisite to many of their courses in Computational Biology and Econometrics which are new PhD specialties that require the use and understanding of super computers.

Next month we can put everything we have learned about Message Passing Interface (MPI) and Raspberry PIs (RPIs) to the test! We will try our hands at plotting Mandelbrot fractals the same way we eased into myPI: writing a serial or sequential version first and then writing a parallel version. Maybe we can even play with Julia fractals as well as fractal zoom, POVRAY and Blender animations! In the months to come we will start working on the hardware side of our project again by scaling our Linux Cluster from 4 to 8 or more cores.

Last but not least, did you freeze your code base? All the code from this project, and several other coding projects I've been working on for my students over the past decade is stored on GitHub. Any active repositories on GitHub along with tons of open source software on the internet will be stored in the Arctic Code Vault at 2pm PST on 2/2/2020. All my repositories have stars and are active, so my code base will be stored for 1000 years so future generations can see what we are up to nowadays!

CIS(theta) 2019-2020

Meeting #7 (12/11/19):

Quadrature, Quadrature, wherefore art thou, Quadrature!

CH07: DECEMBER READING

We dedicated this month to dissecting flops.f, which we ran during meeting #5 to stress our mini cluster of four cores. We found that at the heart of flops.f was a Riemann sum calculation for the area bounded by y=4/(1+x^2), y=0, x=0 and x=1 which should equal PI.

Sounds like Calculus, doesn't it? Well, it should because it's a numerical method called Quadrature (breaking up unknown areas into rectangles) to estimate non-rectangular areas aka definite integrals! What stresses our multi-core SMP RPI cluster is calculating millions of such rectangular areas in a short amount of time thereby estimating the value of PI.

Then we recalled that we did this exact same calculation last year in preCalculus Honors on SAGE. What we had to do was to pare down our SAGE code to plain python so we could run it on our RPIs. Of course, another option would be to install and import SYMPY for algebraic or symbolic manipulations and NUMPY for special list, aka array, algorithms which we are spoiled to have in SAGE by default.

In preCalculus Honors, we have three introductory Calculus units at the end of the year. We have a unit on Limits, then a unit on Derivatives and, last but not least, a unit on Integrals. This last unit on Integration includes the following discussion on Quadrature.

Below you will find 4 ScreenCasts using a TI-nSpire CX CAS to explain how to estimate integrals numerically.

Now you will find below another 4 related screencasts coding in SAGE!

Last, but not least, you will see below three sagelets from the videos above as well as the three pythonic code snippets we came up with such that the last one should run in plain vanilla python using IDLE or Trinket (we were developing in SAGE). This is the code we will run on the RPI and will try to convert to MPI4PY code next month.

CIS(theta) 2019-2020

Meeting #6 (11/27/19):

HelloWorld Serial & MPI, Finally!

CH06: NOVEMBER READING #2

Using Raspberry PIs as our development platform is a perfect match for our project. Since the operating system is based on Linux and Python is installed by default and we added MPI4PY to the mix, we can do everything we need to do from the commandline.

So, today we wrote two practice programs hello_serial.py and hello_mpi.py to test our setup. Whenever you install a new system and are learning a new environment, it is customary to write some simple programs to run to test your setup. HelloWorld programs are a great starting point when learning how to program in a new language as well. See the code and screen shots below for how to run these sample python programs.

The first line in each program

#!/usr/bin/python

is not an ordinary comment. This line tells the shell where your python interpreter is installed when you run the file as an executable. You may have to change this line if python is installed in a different directory. Also, this line is optional if you are not running your program as an executable file. To run this program simply type the following into your shell from the directory in which you stored hello_serial.py, your sample python program.

python hello_serial.py

If you want to make your program an executable file, type this:

chmod 755 hello_serial.py

then execute your program directly (no root access is needed):

./hello_serial.py

The same can be accomplished with hello_mpi.py, our sample MPI4PY program.

mpirun -np 4 python hello_mpi.py

or

chmod 755 hello_mpi.py

mpirun -np 4 ./hello_mpi.py
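For reference, a hello_mpi.py along these lines might look like the sketch below. This is my own minimal version, not necessarily the one in our screenshots. The greeting function and the fallback for a missing mpi4py are assumptions added so the file also runs without MPI.

```python
#!/usr/bin/python
# Sketch of an MPI "Hello World": every process reports its rank.
try:
    from mpi4py import MPI
    comm = MPI.COMM_WORLD
    rank, size = comm.Get_rank(), comm.Get_size()
    name = MPI.Get_processor_name()
except ImportError:
    # Assumption: behave like a single process if mpi4py is not installed.
    import socket
    rank, size, name = 0, 1, socket.gethostname()


def greeting(rank, size, name):
    """Build the message printed by one process."""
    return "Hello World from process %d of %d on %s" % (rank, size, name)


if __name__ == "__main__":
    print(greeting(rank, size, name))
```

Launched on 4 processes, it should print four greetings, one per rank, in no particular order.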

NOTE01: The filenames turn green when you list the directory in your shell after changing the file permissions from 644 to 755.

NOTE02: 644 and 755 are in octal. The file permissions can be set to anything from 000 to 777, not that every combination makes sense!

644 = 110100100 or rw-r--r--

(ASCII file default)

755 = 111101101 or rwxr-xr-x

(executable file)

w = you have write privileges

r = you have read privileges

x = you can execute this file

1st octal digit = your (owner) permissions

2nd octal digit = group permissions

3rd octal digit = global (everyone else) permissions

NOTE03: mpirun -np 4 means use all 4 processors (RPI 3+ have quadcores).

NOTE04: You can easily read and write these programs using any ASCII editor available from your commandline.

NOTE05: Here's a project similar to ours!
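The octal arithmetic from NOTE02 can be checked with a few lines of python. This is just an illustrative sketch, and the function name permission_string is made up:

```python
def permission_string(mode):
    """Turn an octal mode such as 0o755 into its nine rwx flags."""
    flags = "rwx"
    bits = format(mode & 0o777, "09b")  # nine binary digits, owner first
    return "".join(flags[k % 3] if bit == "1" else "-" for k, bit in enumerate(bits))


if __name__ == "__main__":
    for mode in (0o644, 0o755):
        print(format(mode, "o"), "=", format(mode, "09b"), "or", permission_string(mode))
```

Each octal digit expands to three bits, so the three digits give the read, write and execute flags for the owner, the group and everyone else.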

CIS(theta) 2019-2020

Meeting #5 (11/13/19):

Headless Horseman To The Rescue!

CH05: NOVEMBER READING #1

During this meeting we set up a Headless Server so as to control a Raspberry PI remotely over WiFi (Cellphone Hot Spot or Home Router). This setup frees up some space on our workbench since we don't need a monitor, keyboard or mouse, so we are going wireless! Now I can use the monitor, keyboard and mouse of my Samsung Chromebook Plus (with Chrome OS and Google Play) as a dumb terminal! I was going to add a Bluetooth Keyboard and Mouse, but this solution is much better! What was old is now new again, I suppose. Anyone remember the DEC VT 100 dumb terminals?

See the screen shots below for our headless mode setup. BTW, all screen shots were taken with scrot app on the Chromebook running in headless mode! We used the following droid apps to connect over WiFi: Network Scanner, Mobile SSH and VNC Viewer. You only need a regular monitor, keyboard and mouse during the initial setup of your RPI since the SSH and VNC interfaces are disabled by default on each RPI.

Then we installed openMPI on our RPI (from the Raspbian/Debian Linux repositories). We ran our benchmark program flops.f, written in FORTRAN77, to see how fast a single node might be. Our best result was nearly 750 MegaFLOP/s (0.75 GigaFLOP/s) running on all 4 cores of a single node.

BTW, we initially had difficulty installing openMPI; see the step by step screenshots below. We had to run in the terminal:

sudo apt-get update

sudo apt-get upgrade

again before installing (use sudo):

apt-get install openmpi-bin

apt-get install libopenmpi-dev

apt-get install gfortran

apt-get install python-mpi4py

We installed gfortran to run flops.f and benchmark our quadcores this week.

Finally, we downloaded, compiled and executed flops.f from the Pelican HPC DVD project (see code below):

mpif77 -o flops flops.f

mpirun -np 4 flops

We're going to need mpi4py to code fractal graphs next week using openMPI and python. We can scale the cluster using openSSH to add more RPIs aka nodes to the cluster next month.

HEAD01: Enable SSH and VNC

HEAD02: Choose Your Keyboard

HEAD03: Set Your Locale

HEAD04: Set Your Timezone

HEAD05: Set Your WiFi Country Code

STEP01: apt-get install epic fail

STEP02: re execute apt-get update

STEP03: re execute apt-get upgrade

STEP04: install openmpi-bin

STEP05: install libopenmpi-dev

STEP06: install gfortran

STEP07: install python-mpi4py

STEP08: download flops.f

STEP09: compile flops

STEP10: execute flops and get 734 MFLOP/s

CIS(theta) 2019-2020

Meeting #4 (10/30/19): HDMI & ETH0!

CH04: OCTOBER READING #2

We finally got some HDMI and Ethernet cables. Now we have a USB Keyboard and USB mouse installed as well as a monitor via HDMI. We thought we'd have to set up WiFi, but we just used the Ethernet drop by the SmartBoard PC in the rear of our classroom. We switched on the power supply and finally got to boot up the Raspbian Desktop, a derivative of Debian Linux, much like the Ubuntu desktops we ran for many years before our lab went WimpDoze.

Next, we would have tried to install openMPI but spent forever just updating our Linux OS:

sudo apt-get update

sudo apt-get upgrade

We have now completed STEP0 (September) and STEP1 (October). Next, STEP2 (November) is all about openMPI! See our project summary below.

INTRODUCTION TO CLUSTERS:

0a) Administrativa

0b) Pelican HPC DVD

SETTING UP OUR LINUX CLUSTER:

1a) update/upgrade Linux on one RPI

1b) use a RPI as replacement desktop

2a) install/test openMPI on one RPI

2b) run benchmark program flops.f

3a) install/test openMPI/openSSH on 2 RPIs

3b) run benchmark program flops.f

4a) scale the cluster to 4 RPIs

4b) write our own mpi4py programs

USING OUR LINUX CLUSTER:

5a) programming hires mandelbrot fractals

5b) programming hires julia fractals

6) programming hires ray tracings

7) programming a fractal zoom movie

8) programming animated movie sequences

Pixel/Raspbian Desktop:

NOOBS/Raspbian Desktop:

CIS(theta) 2019-2020

Meeting #3 (10/16/19): RPI UnBoxing!

CH03: OCTOBER READING #1

We un-boxed all the stuff we got from BOCES and DonorsChoose and figured out how to boot up one RPI per student. We are playing around with these micro-board PCs as replacement PCs at home until our next meeting. We tried to set up one RPI in the back of the room. We attached a USB mouse and keyboard. We added a power supply. But when it came time to attach a monitor we found that IT had upgraded all our monitors such that none of them had VGA ports. However, they do have HDMI ports which we didn't have last year. That's good, as the RPI has a HDMI port, so all we need to get for the next meeting is some HDMI cables!

Raspberry Pi 3B:

USB Power Supplies:

NOOBS Sims:

HDMI To VGA Converters:

CIS(theta) 2019-2020

Meeting #2 (09/25/19): PelicanHPC!

CH02: SEPTEMBER READING #2

We downloaded the latest pelicanHPC ISO and burned a DVD for each of us. Then we booted our PCs from the DVD drive and ran openMPI from RAM. We used flops.f to test our "clusters." flops.f is a FORTRAN program that uses mpirun to stress a cluster by calculating PI using Riemann Sums for 4/(1+x^2) from a=0 to b=1.

BTW, I call our PCs "clusters" since they have hexacore processors and openMPI can run on multicore just as well as on a grid. We can't set up a grid based (multinode) Linux cluster as we are not allowed to set up our own DHCP server anymore. We got about 500 MegaFLOP/s per core, so 3 GigaFLOP/s per PC. If we could set up our own DHCP server, we'd get 150 cores running in parallel for about 75 GigaFLOP/s!
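The arithmetic behind those estimates is simple enough to check in python. This sketch assumes perfect scaling, which a real cluster will not reach, and the function name aggregate_gflops is hypothetical:

```python
def aggregate_gflops(cores, mflops_per_core):
    """Best-case throughput in GigaFLOP/s if every core ran at full speed."""
    return cores * mflops_per_core / 1000.0


if __name__ == "__main__":
    print("one hexacore PC:", aggregate_gflops(6, 500), "GigaFLOP/s")
    print("150 cores:", aggregate_gflops(150, 500), "GigaFLOP/s")
```

Since 150 cores at 6 cores per PC works out to 25 PCs, the 75 GigaFLOP/s figure is a ceiling for the whole room rather than a measured result.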

Compile:

mpif77 -o flops flops.f

Execute multicore:

mpirun -np 4 flops

Execute multinode:

mpirun -np 100 --hostfile machines flops

Enter pelicanHPC as our first solution! We demoed an old DVD we had to show how to fire up the cluster. Our experiment demonstrated that we could not boot the whole room anymore, as we used to, since PXE Boot or Netboot requires we set up our own DHCP server. When you boot the DVD on one PC, it sets up a DHCP server so all the other PCs can PXE Boot the same OS over Ethernet running in RAM. However, our new WimpDoze network uses its own DHCP server. These two servers conflict, so we cannot reliably connect all the Worker bees to the Queen bee. We can't set up grid computing or a grid cluster, but we can still set up SMP. In other words, boot up a single PC with the pelicanHPC DVD and run multicore applications on all the cores on that one PC.

So, here's your homework. Download the latest pelicanHPC ISO file and burn your own bootable DVD. Don't worry if your first burn doesn't boot. You can use that DVD as a "Linux Coaster" for your favorite beverage the next time you play on SteamOS. If you can make this work at home, try to run Hello_World_MPI.py from John Burke's sample MPI4PY (MPI for Python) code.

See below for our Raspberry PI project. We have been waiting for funding for some extra hardware from DonorsChoose and we just got it! Yeah! In the mean time we're playing with PelicanHPC and BCCD DVDs to see how openMPI works so we can set it up the same way on our new Linux Cluster.

We've decided to make a Linux Cluster out of Raspberry Pi single board computers! Our school district has been kind enough to purchase 25 RPIs plus some USB and Ethernet cabling, so now we just need some power supplies, routers and SD cards. So here comes DonorsChoose to the rescue! We started a campaign to raise the money to purchase all the remaining equipment from Amazon!

What we want to do is to replace our Linux Lab of 25 quadcore PCs, where we used to do this project, with 25 networked RPI 3.0s. The Raspbian OS is a perfect match for our project! Raspbian is Linux based just like our old lab which was based on Ubuntu Linux. Also, python is built-in so we can just add openSSH and openMPI to code with MPI4PY once again! With the NOOBS SD card, we start with Linux and python preinstalled!

Once we get all the hardware networked and the firmware installed, we can install an openMPI software stack. Then we can generate Fractals, MandelZooms, POV-Rays and Blender Animations!

CIS(theta) 2019-2020

Meeting #1 (09/11/19): Administrativa!

CH01: SEPTEMBER READING #1

(0) What Is CIS(Theta)?

CIS stands for our Computing Independent Study course. "Theta" is just for fun (aka preCalculus class). Usually, I refer to each class by the school year, for example CIS(2019). I've been running some sort of independent study class every year since 1995.

In recent years our independent study class has been about the care and feeding of Linux Clusters: How to Build A Cluster, How To Program A Cluster and What Can We Do With A Cluster?

BTW, Shadowfax is the name of the cluster we build! FYI, we offer 4 computing courses:

CSH: Computer Science Honors with an introduction to coding in Python using IDLE, VIDLE and Trinket,

CSAP: AP Computer Science A using CS50 and OpenProcessing,

CIS: Computing Independent Study using OpenMPI and

CSL: Computing Science Lab which is a co-requisite for Calculus students using Computer Algebra Systems such as SAGE.

(1) Wreath of the Unknown Server: We visited our LAST ever Linux ssh/sftp server, Rivendell, which is still in the Book Room, though dormant. Yes, I'm afraid it's true, all my Linux Boxes have been replaced with WimpDoze!

(2a) Planning: So we have to find an alternative to installing MPI on native Linux! We're thinking Raspberry PIs?

(2b) Research: How do we run MPI under WimpDoze without installing anything? How about MPI4PY? What about Raspbian?

(2c) Reading: In the mean time, here's our first reading assignment.

(3) Display Case Unveiled: We took down a ton of fractal prints and ray tracings from Room 429 to the 2 display cases on the 1st floor near the art wing. We decorated both display cases as best we could and left before anyone saw us. Must have been gremlins. BTW, we also have a HDTV with Chromecast to showcase student work here.

(4) NCSHS: We're going to continue our chapter of the National Computer Science Honor Society. We talked about the requirements for membership and how we started a chapter. Each chapter is called "Zeta Omicron something." We're "Zeta Omicron NY Hopper." This is a pretty new honor society. The first few chapters were called Zeta Omicron Alpha and Zeta Omicron Beta. We have the first NYS chapter! BTW, NCSHS is not to be confused with my Calculus class and the CML. I am also the advisor for the Continental Mathematics League Calculus Division which is like Mathletes with in-house competitions about Calculus. CML is an international competition where we usually place in the top 3 or 4 schools in the TriState. We've been competing for several years!

NEW SMARTBOARD SETUP

NOTE: MIC FOR SCREENCASTING!

NOTE: TI nSPIRE CX CAS EMULATOR!!

NEW DECOR IN THE REAR OF ROOM 429

NOTE: SLIDERULE!

NOTE: OLD LINUX SERVERS!!

NEW TAPESTRIES IN ROOM 429

NEW VIEW FROM LEFT REAR SIDE

NOTE: OLD UBUNTU LINUX DESKTOP!

NEW VIEW AS YOU WALK IN

NOTE: SLIDERULE!

====================

CIS(theta) Membership Hall Of Fame!

(alphabetic by first name):

CIS(theta) 2019-2020:

AaronH(12), AidanSB(12), JordanH(12), PeytonM(12)

CIS(theta) 2018-2019:

GaiusO(11), GiovanniA(12), JulianP(12), TosinA(12)

CIS(theta) 2017-2018:

BrandonB(12), FabbyF(12), JoehanA(12), RusselK(12)

CIS(theta) 2016-2017:

DanielD(12), JevanyI(12), JuliaL(12), MichaelS(12), YaminiN(12)

CIS(theta) 2015-2016:

BenR(11), BrandonL(12), DavidZ(12), GabeT(12), HarrisonD(11), HunterS(12), JacksonC(11), SafirT(12), TimL(12)

CIS(theta) 2014-2015:

BryceB(12), CheyenneC(12), CliffordD(12), DanielP(12), DavidZ(12), GabeT(11), KeyhanV(11), NoelS(12), SafirT(11)

CIS(theta) 2013-2014:

BryanS(12), CheyenneC(11), DanielG(12), HarineeN(12), RichardH(12), RyanW(12), TatianaR(12), TylerK(12)

CIS(theta) 2012-2013:

Kyle Seipp(12)

CIS(theta) 2011-2012:

Graham Smith(12), George Abreu(12), Kenny Krug(12), Lucas Eager-Leavitt(12)

CIS(theta) 2010-2011:

David Gonzalez(12), Herbert Kwok(12), Jay Wong(12), Josh Granoff(12), Ryan Hothan(12)

CIS(theta) 2009-2010:

Arthur Dysart(12), Devin Bramble(12), Jeremy Agostino(12), Steve Beller(12)

CIS(theta) 2008-2009:

Marc Aldorasi(12), Mitchel Wong(12)

CIS(theta) 2007-2008:

Chris Rai(12), Frank Kotarski(12), Nathaniel Roman(12)

CIS(theta) 1988-2007:

A. Jorge Garcia, Gabriel Garcia, James McLurkin, Joe Bernstein, ... too many to mention here!

====================

Sincerely,

A. Jorge Garcia

Applied Math, Physics and CS

http://shadowfaxrant.blogspot.com

http://www.youtube.com/calcpage2009

Well, that's all folks!

Happy Linux Clustering,

A. Jorge Garcia

Applied Math, Physics & CompSci

PasteBin SlideShare

(IDEs)

MATH 4H, AP CALC, CSH: SAGECELL

CSH: SAGE Server

CSH: Trinket.io

APCSA: c9.io

(Curriculae)

CSH: CodeHS

CSH: Coding In Python

CSH: Interactive Python

APCSA: Big Java

APCSA: Nature Of Code
